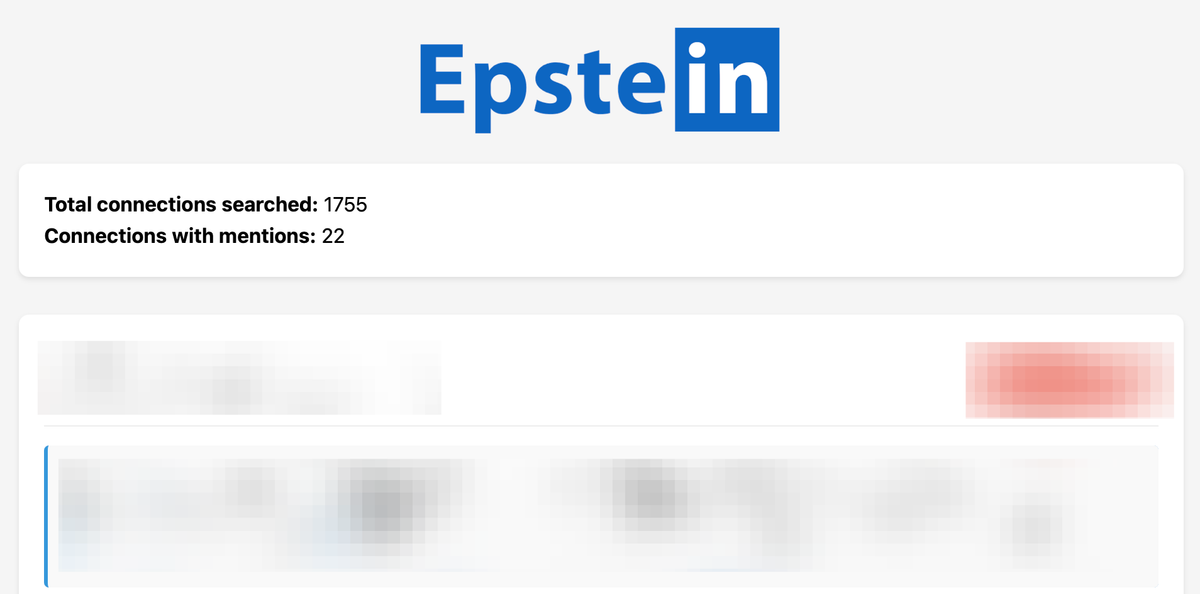

A new tool searches your LinkedIn connections for people who are mentioned in the Epstein files, just in case you don’t, understandably, want anything to do with them on the already deranged social network.

404 Media tested the tool, called EpsteIn—as in, a mash up of Epstein and LinkedIn—and it appears to work.

“I found myself wondering whether anyone had mapped Epstein’s network in the style of LinkedIn—how many people are 1st/2nd/3rd degree connections of Jeffrey Epstein?” Christopher Finke, the creator of the tool, told 404 Media in an email. “Smarter programmers than me have already built tools to visualize that, but I couldn’t find anything that would show the overlap between my network and his.”

- Archive: http://archive.today/AIkL2

- Github: https://github.com/cfinke/EpsteIn

Is there a tool that crunches the entirety of the documents and sorts the individual words by frequency? For example, doing it the stupid way (semi-manually) I copied OP’s article into Word and replaced every space with a page break to turn the entire article into a one-word-per-line list, then plugged that into Excel and sorted alphabetically, then manually counted and deleted the repeats. Then sorted those to put the most frequent on top.

This reduced the 525 word article down to a list of 284 individual words. If I added another article to this list, the number of entries would only be increased by the number of words in the 2nd article that didn’t appear in the first one, so basically as more and more articles are added, the number of unique additions from each would be fewer and fewer. Do this to a thousands-of-pages of documents like the Epstein files, and you could instantly condense like dozens of pages worth of just the word “the” down to a single entry, making the entirety of the documents much easier to skim for highlights… like, if the word ‘velociraptor’ was just randomly hidden in the article, most readers would probably skim right passed it; but in the list below it would stand out like a sore thumb, prompting a targeted search in the full document for context. Especially if we could flag words as not interesting, and like click to knock “the” “of” “and” etc off the list.

…maybe a project for someone who actually knows what they’re doing… my skills hit a brick wall after things like ‘find and replace’ in Word, but you get the gist.

Seriously, if you’re motivated enough to do this, you should give programming a try. Python or Ruby or Javascript are ideal for this kind of thing, and you can solve problems like this in a few lines of code… just look up “word frequency in Python” or whatever language for examples.

If you want to see what the next level of this kind of analysis looks like, watch a few videos about how Elasticsearch works… not so much so you can USE Elasticsearch (although you can, it’s free), but just to get a sense of how they approach problems like this: Like imagine instead of just counting word occurrences, you kept track of WHERE in the text the word was. You could still count the number of occurrences, but also find surrounding text and do a bunch of other interesting things too.

There’s probably a nice shell multiline command that does what you want lol. cat + awk unique count + sort

I’m just forgetting is there’s an easy way to keep the line numbers or filename so you can easily go back to the full page reference.