
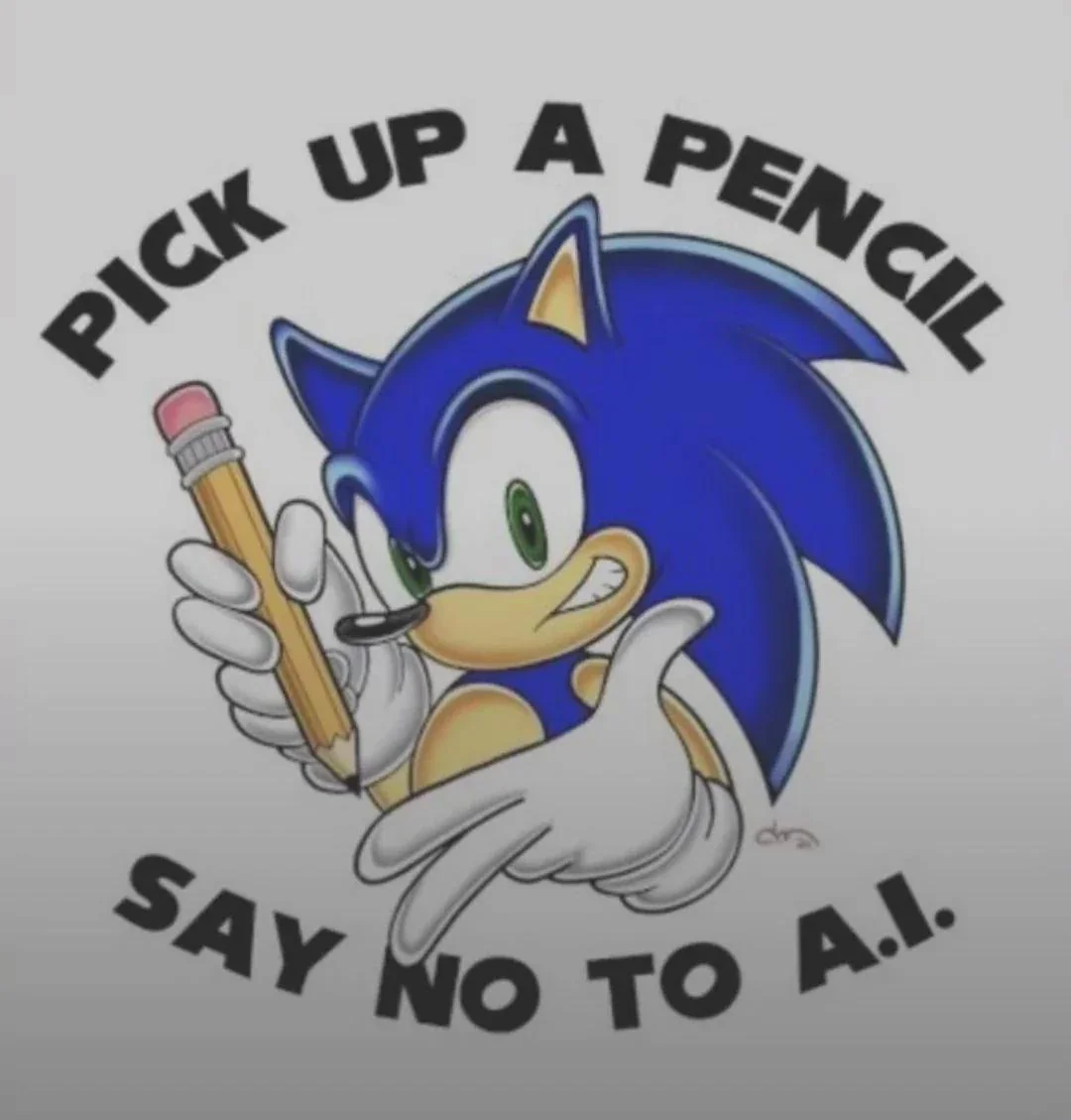

THROW IT BACK!

LLM impression

You’re absolutely right! — 🤖 When people tell each other to ask AI language models instead of answering the damn question, or at least continuing the conversation in a productive manner—perhaps with follow-up questions—they isolate themselves from those around them. It’s not just foolish—it’s actively antisocial! 🙁

I actually can’t tell.

The emoji overuse is spot-on.

Ah yes, just consult the misinformation vortex anytime you need the answer to anything.

It amazes me that you all loathe AI search but will happily deal with regular search, which has always given you garbage. AI search just makes it easier to start checking sources and to go through larger amounts of material.

AI search is only useful because of crippled search engines prioritizing making money over usefulness

It makes up sources all the time. And when it does give a legitimate source, it makes up a quote or hallucinates nonexistent detail from it. Use your brain, not AI.

Regular search wasn’t always garbage. We used to be able to use symbols to refine our searches and could find exactly what we were looking for within seconds. Random part number for a specific model? Random debug issue? Fuckin weird-ass error in the software?

Nowadays you can search for a string you fuckin know exists and it’s like, “there’s nothing on the web for that.” Absolute fuckin lies. The shit is still indexed somewhere.

It’s because AI gives you complete bollocks which looks like a correct answer complete with made-up sources.

One gives you shit, the other gives you shit that looks like tasty food.

Plus normal search is not creating the biggest financial bubble in modern history while destroying the environment

Remember back when you could buy RAM? Those were good times.

Which is why you click on the links, read, and verify. This just provides organization and context to verify and dig into, compared to a list and summary. You have to validate and read either way. LLMs/RAG just provide tools on top.

Yeah, so essentially just doing a regular google search but with more unnecessary steps starting with a dogshit chat bot.

Which AI are you talking about? There isn’t just one AI, it’s an entire category of technology.

Just asking an LLM to answer a question and give you sources would be an incompetent way to use an LLM. The models will happily hallucinate anything they don’t have an answer for.

But most AI systems that are set up for searching and research use Retrieval Augmented Generation (RAG). Non-AI parts of the system handle the document retrieval and source list preparation. The LLM only uses the reference tags, and a completely non-AI system then creates the source list. The LLM can’t hallucinate a source list because no competently designed system would trust the LLM not to hallucinate there, when the designers could simply program that part and not waste LLM tokens on simple-to-solve problems.

So, for example, if you were to ask, ‘Who won the 1984 Olympics?’, the system searches documents (or websites, as here) and then passes the results to the LLM, which only summarizes the documents it was given in response to the user’s question.
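A minimal Python sketch of that pipeline, under loudly stated assumptions: `retrieve` is a hypothetical toy word-overlap ranking standing in for a real search engine, the documents and example.com URLs are placeholders, and the model’s answer is a hard-coded string rather than a real LLM call. It only illustrates the claim that the source list is built by ordinary code from the retrieved set, so a made-up tag like `[7]` can’t turn into a made-up source. It says nothing about whether the summary text itself is accurate.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    doc_id: int
    title: str
    url: str
    text: str


def retrieve(query: str, corpus: list[Document], k: int = 2) -> list[Document]:
    """Hypothetical stand-in for a real search engine: rank documents by shared words."""
    terms = set(query.lower().split())
    ranked = sorted(
        corpus,
        key=lambda d: len(terms & set(d.text.lower().split())),
        reverse=True,  # sort is stable, so ties keep corpus order
    )
    return ranked[:k]


def build_prompt(question: str, docs: list[Document]) -> str:
    """Give the model only the retrieved text, each chunk labelled with a numeric tag."""
    context = "\n".join(f"[{d.doc_id}] {d.text}" for d in docs)
    return (
        "Answer using only the sources below and cite them as [n].\n"
        f"{context}\n\nQuestion: {question}"
    )


TAG = re.compile(r"\[(\d+)\]")


def build_source_list(answer: str, docs: list[Document]) -> list[str]:
    """Deterministic, non-LLM step: map tags in the answer back to retrieved documents.

    Tags with no matching retrieved document are dropped, so the model
    cannot add a source that the search step never returned.
    """
    by_id = {d.doc_id: d for d in docs}
    seen: set[int] = set()
    sources = []
    for match in TAG.finditer(answer):
        doc_id = int(match.group(1))
        if doc_id in by_id and doc_id not in seen:
            seen.add(doc_id)
            d = by_id[doc_id]
            sources.append(f"[{d.doc_id}] {d.title} <{d.url}>")
    return sources


if __name__ == "__main__":
    # Placeholder corpus; titles, URLs and text are made up for illustration.
    corpus = [
        Document(1, "Example page A", "https://example.com/a", "1984 olympics medal table"),
        Document(2, "Example page B", "https://example.com/b", "1984 olympics host city"),
        Document(3, "Example page C", "https://example.com/c", "bread recipe"),
    ]
    question = "who won the 1984 olympics"
    docs = retrieve(question, corpus)
    print(build_prompt(question, docs))
    # Pretend model output; [7] is a hallucinated tag with no matching document.
    fake_answer = "Summary drawn from the medal table [1] and a made-up page [7]."
    print(build_source_list(fake_answer, docs))
    # prints ['[1] Example page A <https://example.com/a>']
```

The design choice being illustrated is that the only thing the model controls is which tags appear in its text; the titles and URLs in the source list always come from the search results.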

so we are using the “regular search which has always given you garbage” and taking that garbage automatically to get summarised by the hallucinator and we are supposed to trust the output somehow?

No, you don’t trust the output. You shouldn’t trust the output of search either. This is just search with summarization.

That’s why there are linked sources so that you can verify yourself. The person’s contention was that you can’t trust citations because they can be hallucinated. That’s not how these systems work, the citations are not handled by LLMs at all except as references, the actual source list is entirely a regular search program.

The LLM’s summarization and sources are like the Google results page: they’re not information you should trust by themselves, they’re simply links that take you to information that’s responsive to your search. The LLM provides a high-level summary so you can make a more informed decision about which sources to look at.

Anyone treating LLMs like they’re reliable is asking for trouble, just like anyone who believes everything they read on Facebook or cites Wikipedia directly.

Search didn’t used to give “output”. It used to give links to a wide variety of sources such as detailed and exact official documentation. There was nothing to “trust”.

Now it’s all slop bullshit that needs to be double-checked, a process that frankly takes just as long as finding the information yourself using the old system, and even that still can’t be trusted in case it missed something.

> Search didn’t used to give “output”. It used to give links to a wide variety of sources such as detailed and exact official documentation. There was nothing to “trust”.

If you search on Google, the results are an output. There’s nothing AI about the term output.

You get the same output here and, as you can see, the sources are just as easily accessible as a Google search and are handled by non-LLM systems so they cannot be hallucinations.

The topic here is about hallucinating sources, my entire position is that this doesn’t happen unless you’re intentionally using LLMs for things that they are not good at. You can see that systems like this do not use the LLM to handle source retrieval or citation.

> Now it’s all slop bullshit that needs to be double-checked, a process that frankly takes just as long as finding the information yourself using the old system, and even that still can’t be trusted in case it missed something.

This is true of Google too, if you’re operating on the premise that you can trust Google’s search results then you should know about Search Engine Optimization (https://en.wikipedia.org/wiki/Search_engine_optimization), an entire industry that exists specifically to manipulate Google’s search results. If you trust Google more than AI systems built on search then you’re just committing the same error.

Yes, you shouldn’t trust things you read on the Internet until you’ve confirmed them from primary sources. This is true of Google searches or AI summarized results of Google searches.

I’m not saying that you should cite LLM output as facts, I’m saying that the argument that ‘AIs hallucinate sources’ isn’t true of these systems which are designed to not allow LLMs to be in the workflow that retrieves and cites data.

It’s like complaining that live ducks make poor pool toys… if you’re using them for that, the problem isn’t the ducks it’s the person who has no idea what they’re doing.

so I fail to see why I should be using an LLM at all then. If I am going to the webpages anyway, why shouldn’t I just use startpage/searx/yacy/whatever?

Yeah, if you already know where you’re going then sure, add it to Dashy or make a bookmark in your browser.

But if you’re going to search for something anyway, then why would you use regular search and skim the tiny amount of random text returned with Google’s results? In the same amount of time, you could dump the entire contents of the pages into an LLM’s context window and have it tailor the response to your question based on the text.

You still have to actually click on some links to get to the real information, but a summary generated from the contents of the results is more likely to be relevant than the text presented in Google’s results page. In both cases you still have a list of links, generated by a search engine and not AI, which are responsive to your query.

You are just speaking to a brick wall. It’s taking all the jobs AND garbage. Can’t be a tool in between that has pros and cons.

AI “search”. Give me a fucking break.

👌👍

Vulgar Displai of Power

I’m adding “AI Bros” to the list of people it is morally acceptable to punch at any time. Current list: Nazis, AI Bros.

That Venn diagram might as well be a circle

You are experiencing fear-based psychosis. Please seek professional help before it’s too late and you hurt yourself or a loved one.

Shut up, AI bro.

Internet tough guy shit that’ll never happen for 1000, Alex.

Some information is not digitized, and not all digitized information has been accurately processed by a machine. This might also be relevant: https://stopcitingai.com/

I’ve got a colleague who has delegated his thinking to ChatGPT. When I talk to him, I might as well talk directly to ChatGPT.

Borg.

The Borg actually cared about information being factual.

I think this google search explains the topic pretty well: https://gprivate.com/6k1ci

Are they asking if they know how to hide a body?

Yes 🤣 Something I bet AI would be good at, tbf

That’s what he gets for pissing off a Saiyan.

Thank you! Let’s hope he’ll get the brain in place and reconsider his limited life time and missed opportunities for achievements…